Spark users want convenience in the cloud — here are new ways they may get it

Over the course of the last couple of years, Apache Spark has enjoyed explosive growth in both usage and mind share. These days, any self-respecting big data offering is obliged to either connect to or make use of it.

Now comes the hard part: turning Spark into a commodity. More than that, Spark has to live up to its promise of being the most convenient, versatile, and fast-moving data processing framework around.

There are two obvious ways to do that in this cloud-centric world: Host Spark as a service, or build connectivity to Spark into an existing service. Several such approaches were unveiled this week at Spark Summit 2016, and they say as much about the companies offering them as they do about Spark's meteoric ascent.

Microsoft

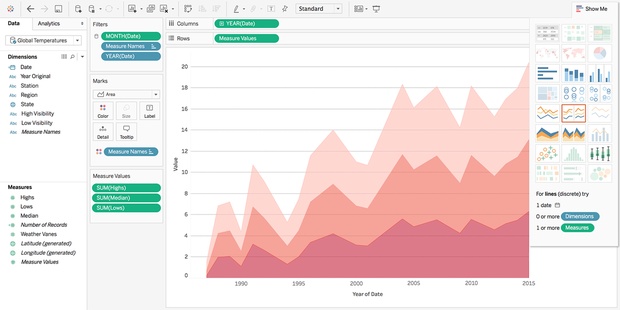

Microsoft has pinned a growing share of its future on the success of Azure, and in turn on the success of Azure’s roster of big data tools. Therefore, Spark has been made a first-class citizen in Power BI, Azure HDInsight, and the Azure-hosted R Server.

Power BI is Microsoft's attempt (emphasis on "attempt") at creating a Tableau-like data visualization service, while Azure HDInsight is an Azure-hosted Hadoop/R/HBase/Storm-as-a-service offering. For tools like these, lacking Spark support would be like a bike without pedals.

Microsoft is also rolling the dice on a bleeding-edge Spark feature: the new Structured Streaming component, which Microsoft is using to let Spark data stream directly into Power BI. Structured Streaming is not only a significant upgrade to Spark's streaming framework; it also competes with other data streaming technologies, such as Apache Storm. So far it's relatively unproven in production and already faces competition from the likes of Project Apex.

The bet reflects Microsoft's confidence in Spark generally more than in Structured Streaming specifically. The sheer momentum around Spark ought to ensure that any issues with Structured Streaming are ironed out in time, whether or not Microsoft contributes any direct work to that effort.

IBM

IBM's bet on Spark has been nothing short of massive. Not only has Big Blue re-engineered some of its existing data apps with Spark as the engine, it's made Spark a first-class citizen on its Bluemix PaaS and will be adding its SystemML machine learning algorithms to Spark as open source. This is all part of IBM's strategy to shed its mainframe-to-PC-era legacy and become a cloud, analytics, and cognitive services giant.

Until now, IBM has leveraged Spark by making it a component of already established services, such as Bluemix. IBM's next step, though, will be to provide Spark and a slew of related tools in a more free-form, interactive environment: the IBM Data Science Experience. It's essentially an online data IDE, where a user can interactively manipulate data and code (Spark for analytics, Python/Scala/R for programming), add in data sources from Bluemix, and publish the results for others to examine.

If this sounds a lot like Jupyter notebooks, that is one of the metaphors IBM had in mind; in fact, Jupyter notebooks are a supported format. What's new is that IBM is trying to expose Spark (and the rest of its service mix) in a way that complements Spark's vaunted qualities: its overall ease of use and its low barrier to entry for prospective data scientists.

Snowflake

Cloud data warehouse startup Snowflake is making Spark a standard-issue component as well. Its original mission was to provide analytics and data warehousing that spared users the hassle of micromanaging setup and administration. Now it's giving Spark the same treatment: Skip the setup hassles and enjoy a self-managing data repository that can serve as a source for, or a destination of, Spark processing. Data can be streamed into Snowflake by way of Spark, or extracted from Snowflake and processed by Spark.

Spark lets Snowflake users work with their data through a software library rather than only through a query language like SQL. This plays to Snowflake's biggest selling point, automated management of scaling data infrastructure, rather than merely adding another black-box SQL engine.

Databricks

With Databricks, the commercial outfit that spearheads Spark development and offers its own hosted platform, the question has always been how it can distinguish itself from other platforms where Spark is a standard-issue element. The current strategy: Hook ’em with convenience, then sell ’em on sophistication.

Thus, Databricks recently rolled out the Community Edition, a free tier for those who want to get to know Spark but don’t want to monkey around with provisioning clusters or tracking down a practice data set. Community Edition provides a 6GB microcluster (it times out after a certain period of inactivity), a notebook-style interface, and several sample data sets.

Once people feel they have a handle on Spark's workings, they can graduate to the paid version and continue using whatever data they've already migrated into it. In that sense, Databricks is attempting to capture an entry-level audience, a pool of users likely to grow with Spark's popularity. But the hard part, again, is fending off competition. Because Spark is open source, a rival with far more scale and a far larger existing customer base can more easily lure that audience away.

If there's one consistent theme among these moves, especially as Spark 2.0 looms, it's that convenience matters. Spark caught on because it made working with gobs of data far less ornery than the MapReduce systems of yore. The platforms that offer Spark as a service all have to assume their mission is twofold: Realize Spark's promise of convenience in new ways, and assume someone else is trying to do the same, only better.

Source: InfoWorld Big Data